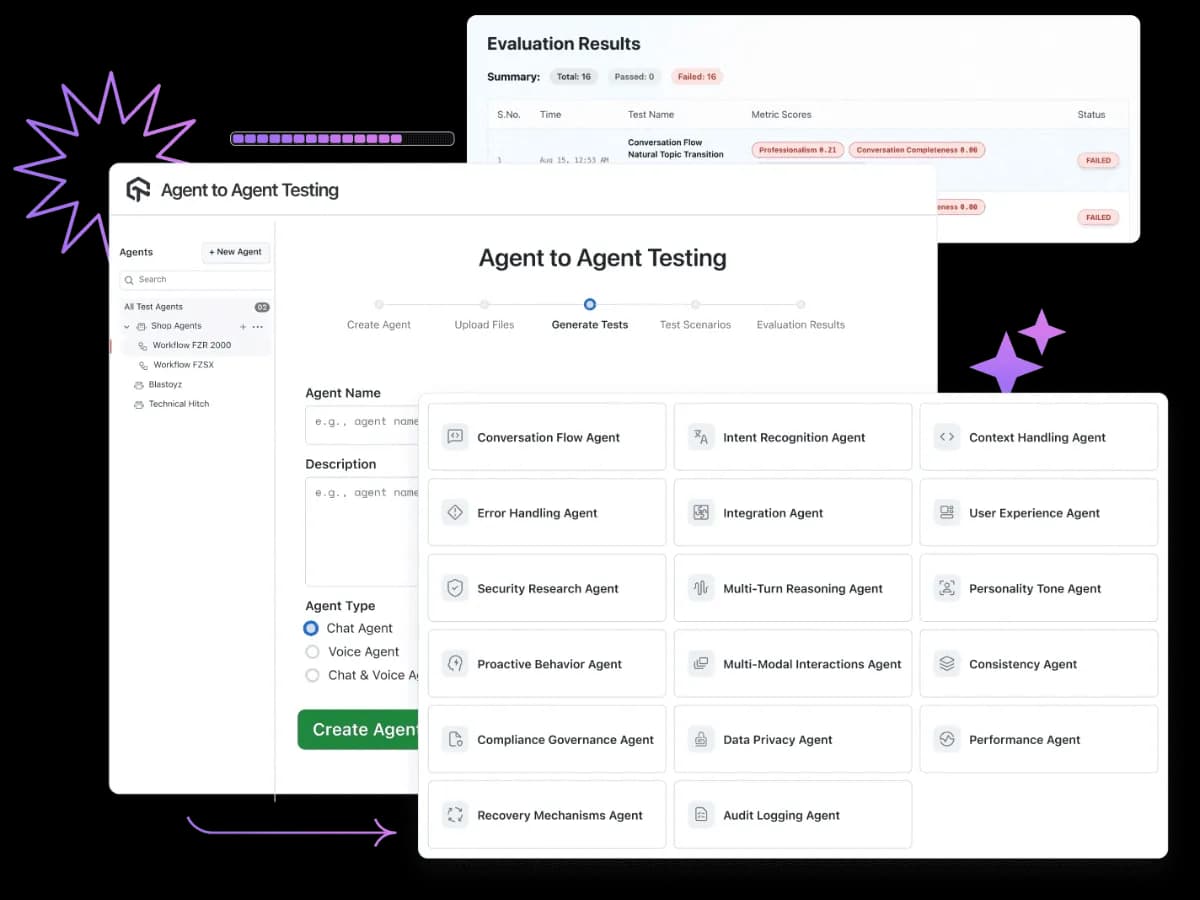

Agent to Agent Testing

Agent-to-Agent Testing validates agent behavior across chat, voice, phone, and multimodal systems, detecting security and compliance risks.

About Agent to Agent Testing

Agent-to-Agent Testing is a first-of-its-kind, AI-native quality assurance framework built to validate how AI agents behave in real-world environments. As agentic AI systems become more autonomous and less predictable, traditional QA models, which were designed for static software, fail to keep pace.

Agent-to-Agent Testing goes beyond prompt-level validation to evaluate complete, multi-turn conversations across chat, voice, phone, and multimodal experiences. It enables enterprises to test AI chatbots, voice assistants, phone agents, and virtual support agents for hallucinations, bias, accuracy, security risks, and compliance readiness before production rollout.

Agent-to-Agent Testing Key Features

Agent-to-Agent Testing addresses the core limitations of traditional and low-code testing approaches by introducing a dedicated assurance layer for AI behavior.

- Multi-Agent Test Generation

Generate diverse test scenarios using 17+ specialized AI agents. This uncovers long-tail failures, edge cases, and interaction patterns that manual scripts and prompt testing consistently miss.

- Autonomous Synthetic User Testing

Simulate thousands of production-like user interactions at scale. Built-in validation covers traceability, policy violations, escalation paths, and agent-to-agent handoffs.

- Unified Scoring Across Chat and Voice

Evaluate AI performance across chat and voice channels using a single, standardized scoring framework. Ensure consistent benchmarking across releases and experiences.

- Advanced Voice Simulation

Test voice and phone agents using 200+ simulated voices and accents and 20+ background conditions, including noise, latency, translation errors, and poor connections.

- Persona-Based Behavioral Testing

Validate AI agents against 25+ pre-built personas such as anxious callers, hesitant users, or assertive conversationalists. Create custom personas to reflect business-specific behavior.

- Behavioral Edge-Case Testing

Stress test AI agents against interruptions, hesitations, off-script queries, and unexpected inputs to ensure reliable conversation flow under real-world pressure.

- AI-Specific Quality Metrics

Measure AI systems using modern metrics including hallucination risk, bias detection, accuracy, completeness, and context awareness.

- Quality Analytics & Executive Visibility

Track pass/fail trends, scenario performance, and quality signals through unified dashboards designed for engineering and leadership teams.

Added by

Sarah Elson

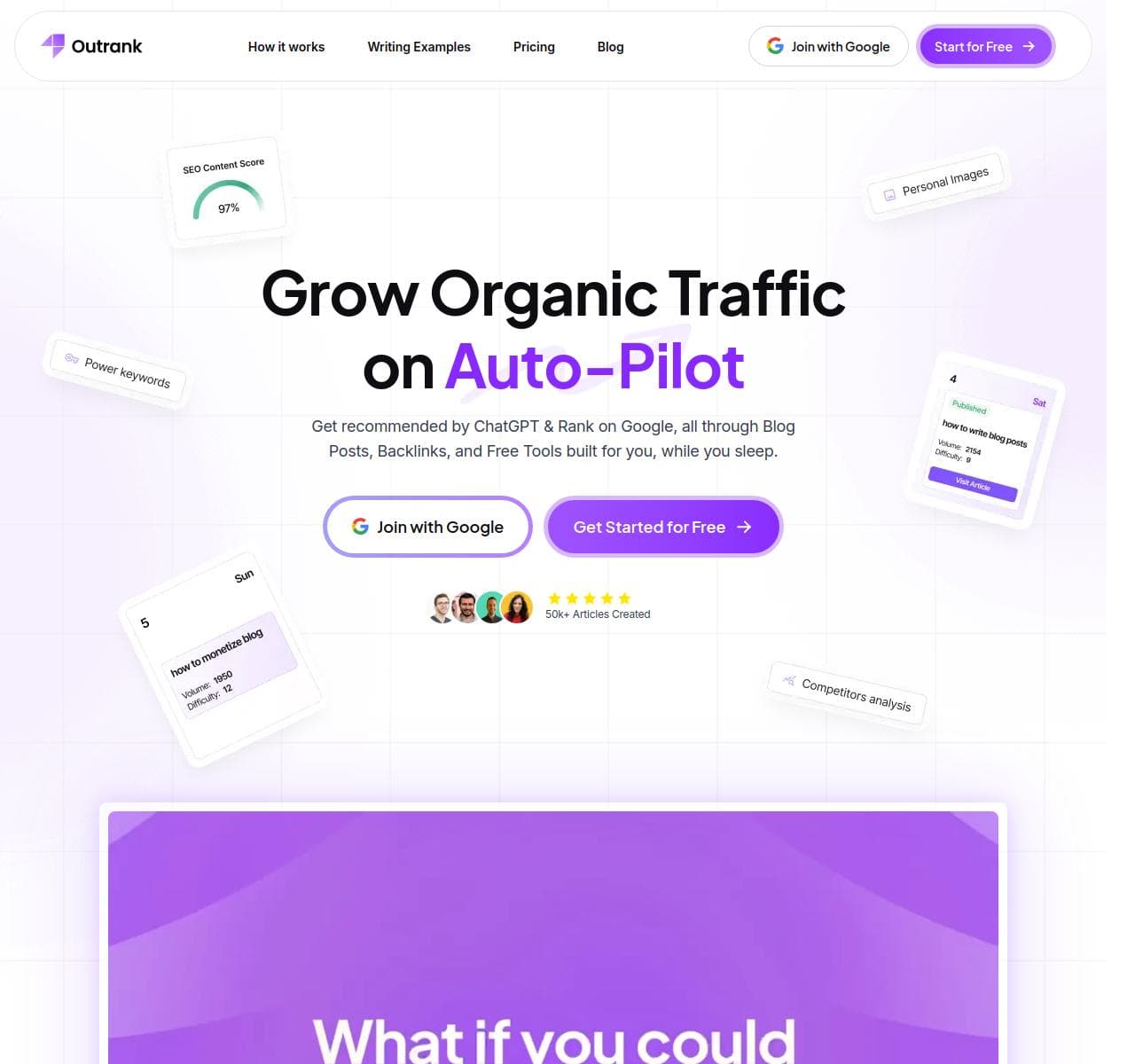

Outrank

An AI-powered platform that researches keywords, writes SEO-optimized long-form content, and publishes it directly to your website.

LaunchDirectories

LaunchDirectories.com is a curated list of 100+ verified startup directories that help indie hackers and founders promote launches, boost SEO, and gain early traction.

vaethat

Vaethat is an AI tool for archviz professionals to upscale 3D renders, refine details, and improve clarity while preserving design

Glossa

Accurate and fast AI translation of your sermons into 100+ languages. Available for only $5/hour/language. Reach international community in your city.

AI Best

AI Best - AI Image & Video Generation Platform | Text to Image, Image to Image, Video Generation